Wimp Is Broken

The WimpInterface (WIMP = Windows, Icons, Menu, Pointer) is a broken concept.

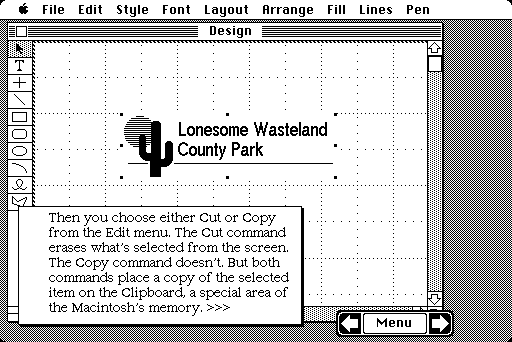

Contrary to popular opinion, it is extremely user-unfriendly: hard for beginning computer users to learn and, once mastered, hard to use. It's a slow and non-intuitive way to interface with a computer. If you think that you prefer keyboard shortcuts just because you're an experienced computer user, wait until you see a complete computer novice approach the mouse (a.k.a. the "foot-pedal"), especially when you try to explain to them how to "double-click" on some vaguely Egyptian hieroglyph.

This is an attempt to short-circuit a lot of vague and unproductive argument below. If it works, the unproductive argument can be almost entirely refactored away.

A WimpInterface is a lousy substitute for an interface that's systematically designed to be usable in every regard. We shouldn't settle for Wimp; we may have to tolerate it but we certainly shouldn't settle for it even now.

-- RichardKulisz [more RK opinions follow in italics]

A human-driven rarely-updated imperative CLI is a lousy substitute for a self-driven continuously updated OO GUI.

Many people disagree.

{Well, honestly, they ought to admit it's logically true. If they need a self-driven continuously updated OO GUI, a human-driven rarely-updated imperative CLI would be a lousy substitute. OTOH, I usually only need a human-driven rarely-updated imperative CLI. When that's all I need, a self-driven continuously updating OO GUI could easily become a source of bloat and information overload (unless I can tightly control how much it self-updates).}

A programmable CLI is a lousy substitute for a programmable GUI.

Out of curiosity, can you name a programmable GUI? As opposed to a GUI that has a textual scripting language like REXX, which is really no better than the CLIs you seem so opposed to.

You know, it's not an either-or matter. There's nothing wrong with text, just with CLIs.

So you can't think of any good examples that aren't just CLIs with lipstick? Quite diagnostic.

Hardly. I can't think of any good GUIs, period. And I really haven't studied graphical languages. I don't see what's wrong with a textual language so long as you can drag and drop (DnD) live objects into source code.

Dialogs are lousy substitutes for tooltips.

I think tooltips are annoying. (To be fair, I also think dialogs are annoying.)

Tooltips suck but they're useful to demonstrate that clearly superior alternatives to dialogs exist which even idiots can take advantage of. Despite this, tooltips remain vastly underused.

And AmigaOs is underused solely because it's superior.

I wouldn't be surprised about AmigaOS.

Evolution is a lousy substitute for design.

Since some rather interesting programs and electronic circuits have been evolved rather than designed, this seems debatable as currently phrased. Maybe the intended point is that "accident of history is a lousy substitute for design".

Design gave us Brasilia, evolution gave us San Francisco. Where would you rather live?

You don't know much about urban design. SF was clearly designed, just not in a totalitarian style; its regular, rectilinear streets attest to this.

Rectilinear streets that occasionally go up 30% inclines. Great design.

Nobody said it was great design. Rectilinear streets are horrible design since they concentrate traffic around a few focal points.

If you're going to compare things then compare like with like, not apples and oranges. If you're going to compare a city that was designed without any concern for its users' needs, like Brasilia, then compare it with one that evolved without any concern for its users' needs, a medieval city like Paris before Haussmann. And if you're going to compare the products of genetic algorithms, compare them with expert systems and artificial intelligence. When you compare like with like, design wins every time.

I'm not clear what you mean here. Comparing genetic algorithms with expert systems is not comparing like with like. Genetic algorithms are used to generate novel solutions to problems where the solution is not known beforehand, and generally cannot be easily or more efficiently derived through other means. For example, GAs can be used to acceptably (though not necessarily optimally) solve scheduling and routing problems that otherwise might require an exhaustive search of the solution space. Expert systems are used to select a predefined solution based on inputs that do not map directly to the known solution, but that can be derived from a chain of related rules. These are very, very different! Finally, "artificial intelligence" is a term generally used to refer to the field of study that encompasses both genetic algorithms and expert systems. Unless you intend "artificial intelligence" to refer to some cognitive (or whatever) method that doesn't exist yet, when you write "if you're going to compare the products of genetic algorithms, compare them with expert systems and artificial intelligence," it's a little like writing "if you're going to compare the uses of airplanes, compare them with roller skates and conveyances that have wheels." In short, your point is meaningful in a sense -- and you're possibly right that design wins out wherever design is possible, but it isn't always possible -- but not the sense you intended, at least to a reader applying the usual meaning of the terms you use.

You have a good point. I would rather what can be designed actually be designed so I'd create a general system with tunable parameters and then run the parameters through evolutionary trials. But from your roundabout description of GAs as solving systems where inputs map directly to outputs, it seems that's exactly what happens. Given the hype around GAs a decade ago (and neural nets too) I'd gotten the impression they were trying to do more.

Superior knowledge and superior intelligence win over mere guesswork every time. You can see that here; actual knowledge of urban design and the ability to control variables beat empty rhetoric and cherry-picked unrepresentative cases. The point here is that design wins over evolution because it brings knowledge and intelligence to bear on a problem, not mere guesswork and whatever convenient rhetoric (or slime in the case of biological evolution) happens to be lying at hand.

This presupposes that we know what the best solution really is. In UserInterface design, it is painfully obvious that we do not. Thus we need to try things, discard the failures, and improve the successes. That's known as evolution. AllUisSuck?

Bzzzt, wrong. There is lots and lots of HCI research that hasn't been applied. And in any case, progress requires design, not mindless evolution as you seem to imply. For "success" and "failure" to have any non-evolutionary meaning (i.e., it actually works or it's actually good, as opposed to it's widespread), you have to use a designer's mind to analyze and evaluate the outcome. A catastrophic failure may be closer to success than something that's only slightly off. Or you can learn more from a catastrophic failure than from a mitigated success.

What I like about comparisons of evolution and design is when the evolutionist completely glosses over the failure rate of evolutionarily-developed systems. How many species have failed so far? And of the tiny fraction that remain, all you can say of them is that they haven't failed yet.

More specific to what's wrong with Windows, Icons, Menus and Pointers:

Does it make sense to drag down middlebies in order to better serve newbies? Maybe the next generation will learn enough in grade school to avoid most of the problems you describe. My 4-year-old son has adapted pretty well to WIMP.

[lots of stuff moved to WimpTestimonials]

ChristopherAlexander being a household name around here, we might learn something from cities. After all, these millennial artifacts are optimized for spatial navigation, and navigation is the ostensible purpose of GUIs.

Models of cities involve five basic concepts.

Computer interfaces are for task navigation, not for spatial navigation. The vast majority of tasks that people use computers for are not spatial in nature, and a heavy-handed application of the old metaphor onto the new often leads to kludgey things that nobody wants. 3-dimensional desktop, anyone? -- francis

I want a 3D desktop. I just don't want to use one with a 2D pointing device.

Navigation is spatial by definition. I wonder what it is you think users navigate in when you talk about "task navigation". Some abstract mindspace?

The vast majority of tasks I perform on my computer have to do with communication, information storage, organization and retrieval. All of those tasks would benefit from an orientational metaphor. Organization might be so much easier that I'd actually get around to doing it, instead of having tons of miscellaneous files cluttered every which way.

Well, most people seem to prefer them for whatever reason. I don't know why, that is just the way it is.

Most users have no choice whatsoever, so who is it that you're referring to by "most people"?

I noticed that people tended to prefer WIMP over DOS applications and VT-100 around the time Win3.1 came out. While it was not unanimous, there seemed to be a majority in my observation. Maybe the little pictures (icons) were more stimulating or something. I don't really know.

Choosing between a broken GUI and a thirty-year-old CLI isn't exactly giving people a choice.

True, it is not an ideal test lab, but they voted based on their existing experience.

[People still have a choice. Show them a GUI that's as much of an improvement over what they have now as the Mac was over dumb terminals and they'll switch.]

Only if that GUI can be run on a standard Unix machine with standard hardware (one and only one keyboard and 2D pointer). But of course, it can't. Let's look at some of the requirements for a good GUI:

There was a project on SourceForge called 3Dsia that tried to implement a good GUI based on standard Unix hardware. It took me very little time to realize that was a hopeless endeavour. The Unix architecture is broken and having to deal with that architecture created intractable problems. That project is now dead.

[I don't consider any of those requirements for a good GUI. Where are you getting them? Why 3D? And what good is any GUI if no one can run it?]

Unlike most people, I am prepared to switch to a different OS.

Why 3D?

First, because it provides more space to play with, so there's less clutter.

Second, because it provides elegant symmetries, like the sphere.

Third, because it's necessary to model some of the relationships in the system. Many graphs can't be drawn in 2D without edges crossing, and the crossings cause confusion about what's a node.
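The graph argument can be checked with Euler's formula: a simple planar graph with v >= 3 vertices has at most 3v - 6 edges, so any graph with more edges than that cannot be drawn in 2D without crossings (in 3D, every finite graph can be drawn without crossings). A minimal Python sketch of that check follows; it is only a necessary condition, so it can prove a graph non-planar but never prove one planar.

```python
from itertools import combinations


def complete_graph_edges(n: int) -> list:
    """Return the edges of the complete graph K_n on vertices 0..n-1."""
    return list(combinations(range(n), 2))


def violates_euler_bound(num_vertices: int, num_edges: int) -> bool:
    """Return True if a simple graph of this size cannot be planar.

    Uses Euler's bound e <= 3v - 6, valid for v >= 3. Passing the check
    does NOT prove planarity; it is only a necessary condition.
    """
    if num_vertices < 3:
        return False  # graphs this small are always planar
    return num_edges > 3 * num_vertices - 6


for n in range(3, 7):
    e = len(complete_graph_edges(n))
    print(f"K{n}: v={n}, e={e}, limit={3 * n - 6}, "
          f"cannot be planar={violates_euler_bound(n, e)}")
```

K5 (10 edges against a limit of 9) is the smallest complete graph that fails. K3,3 (6 vertices, 9 edges) passes this bound yet is still non-planar, which is why the check is only one-directional.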

Fourth, because even Wimp is 3D: it has overlapping windows.

Hell, to the requirements above I could add several dozen others, including such things as OrthogonalPersistence and LoggingFileSystem. Because users need to be able to see into the past and revert to it at any time they wish. Also because LFS is the simplest way to implement orthogonal persistence. And the need for OP? Well, it should be obvious that no decent GUI forces the user to deal with irrelevant trivialities like the difference between RAM and hard disk.
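The claim that a log-structured store lets users "see into the past and revert to it" can be illustrated in a few lines. This is a hypothetical, in-memory toy in Python (the class AppendOnlyStore and its methods are invented here), not a LoggingFileSystem: writes are only ever appended, any earlier state is rebuilt by replaying a prefix of the log, and reverting is itself just more appended writes, so no history is lost.

```python
from typing import Any, Dict, List, Tuple

# Tombstone value recorded in the log when a key is removed.
_DELETED = object()


class AppendOnlyStore:
    """Toy log-structured key-value store (hypothetical, in-memory only)."""

    def __init__(self) -> None:
        self._log: List[Tuple[str, Any]] = []

    def write(self, key: str, value: Any) -> int:
        """Append a write; return the new log length as a version number."""
        self._log.append((key, value))
        return len(self._log)

    def delete(self, key: str) -> int:
        """Record a deletion as a tombstone rather than erasing anything."""
        return self.write(key, _DELETED)

    def state_at(self, version: int) -> Dict[str, Any]:
        """Rebuild the state as it was after the first `version` entries."""
        state: Dict[str, Any] = {}
        for key, value in self._log[:version]:
            if value is _DELETED:
                state.pop(key, None)
            else:
                state[key] = value
        return state

    def current(self) -> Dict[str, Any]:
        return self.state_at(len(self._log))

    def revert_to(self, version: int) -> int:
        """Make an old state current by appending the writes that restore it."""
        old, now = self.state_at(version), self.current()
        for key in now:
            if key not in old:
                self.delete(key)
        for key, value in old.items():
            if key not in now or now[key] != value:
                self.write(key, value)
        return len(self._log)


store = AppendOnlyStore()
v1 = store.write("draft.txt", "hello")
store.write("draft.txt", "hello world")
store.write("notes.txt", "todo")
store.revert_to(v1)
print(store.current())    # {'draft.txt': 'hello'}
print(store.state_at(3))  # the pre-revert state is still recoverable
```

Because nothing is overwritten, a real system built this way trades disk space for history; compaction policy is the hard part this sketch ignores.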

I think anyone that uses a multitasking OS long enough comes to desire some sort of window based interface. Some way to switch visual contexts, at the very least. When the Mac came out I was disappointed that it didn't really multitask, but I dug the GUI. From then on most multitasking OS GUIs looked something like the Mac. They were WIMPs. Windows 3.1 was the first Windows where multitasking sort of almost worked. It was the best I could do at home after Commodore dropped the Amiga. Windows 3.1 was the first Microsoft OS I ever bought. I also bought my first Intel CPU just to run it. It had a Mac clone GUI and I still dig it. It's much better than what I had before. If something that much better comes along I'll use it. -- EricHodges

ScreenMultiplexor. There are several graphical variants.

I have started to make up CajjuxdySystem? (http://zzo38computer.cjb.net/cajjuxdy.htm), which is a new kind of computer: there is no mouse, only a screen-pen for drawing and selecting points on the screen, and a keyboard for everything else. This new kind of computer solves all the problems of the old kind, including viruses. If it is too hard (like UNIX), of course somebody could make a program that does something like this:

Press the number of what you want to work on:
(1) Email
(2) Typing (word-processor)
(3) Game
(4) CD-ROM

And so that people will not be confused, you make it so that either pressing the number on the keyboard or putting the screen-pen on the number on the screen will work.

Well, no. This was state of the art in 1962, when IvanSutherland created the amazing SketchPad system. But light pens had severe usability problems, which is precisely why DougEngelbart was even then working on an improved design, released in 1968 as the mouse, which is what you are seeking to replace.

It is useful to study history in order to avoid reinventing it, thinking that it is new, not noticing that the older history had its own problems, often worse than the ones you are trying to fix.

What if the real problem is that the screen is vertical instead of horizontal? The ideal interface may be something like a drafting table, where the table is the screen. Then a pen-like thingy may be less tedious. This wasn't possible with 1960s technology because the bulky CRT would bump into your legs. But now we can try it.

That was in fact part of the problem; it is very tiring to hold your arm up unsupported in mid-air all the time. So tablet PCs with stylus may yet prove to work better - but note that they have had mixed reviews since they were seriously introduced a dozen years ago, and then reintroduced just recently. We'll see - but I haven't heard anyone claim that they've been demonstrated to be far better, as yet.

Santayana's point about history remains.

Well, no: this plan is to use the keyboard mostly; the pen is hardly used unless you are drawing. I guess you can make the screen flip up and down, so if you are doing a lot of drawing you can put the screen down on the table, and if you are typing you can lift the screen up. Then you can use it both ways. Another idea: if you don't want to use a pen, you can control the pointer from the keyboard with a key combination (such as SubSystem+Numpad/ to toggle the mode, then the arrows on the numpad to move; ',' and '.' could be used to enter X and Y numbers).
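The keyboard fallback described above is essentially a small state machine. Here is a hypothetical Python sketch of it: the key names ("TOGGLE" standing in for SubSystem+Numpad/, "UP", "ENTER", and so on), the screen size, and the step size are all assumptions, as is the reading that ',' starts typing an X coordinate and '.' starts typing a Y coordinate.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed arrow-key names mapped to (dx, dy) directions.
ARROWS = {"UP": (0, -1), "DOWN": (0, 1), "LEFT": (-1, 0), "RIGHT": (1, 0)}


@dataclass
class KeyboardPointer:
    """Hypothetical keyboard-controlled pointer (names invented for this sketch)."""

    width: int = 640
    height: int = 480
    step: int = 10
    x: int = 0
    y: int = 0
    active: bool = False             # pointer mode on/off
    entering: Optional[str] = None   # "x" or "y" while a number is typed
    digits: str = ""

    def _clamp(self, value: int, limit: int) -> int:
        return max(0, min(limit - 1, value))

    def _commit(self) -> None:
        """Apply a typed coordinate, if any, then leave entry mode."""
        if self.entering == "x" and self.digits:
            self.x = self._clamp(int(self.digits), self.width)
        elif self.entering == "y" and self.digits:
            self.y = self._clamp(int(self.digits), self.height)
        self.entering, self.digits = None, ""

    def press(self, key: str) -> None:
        if key == "TOGGLE":
            self._commit()
            self.active = not self.active
        elif not self.active:
            return  # outside pointer mode, keys belong to the application
        elif key in (",", "."):
            self._commit()
            self.entering = "x" if key == "," else "y"
        elif key.isdigit() and self.entering:
            self.digits += key
        elif key == "ENTER":
            self._commit()
        elif key in ARROWS:
            dx, dy = ARROWS[key]
            self.x = self._clamp(self.x + dx * self.step, self.width)
            self.y = self._clamp(self.y + dy * self.step, self.height)


p = KeyboardPointer()
for key in ["TOGGLE", "RIGHT", "DOWN"]:
    p.press(key)
print(p.x, p.y)  # 10 10
for key in [",", "1", "2", "0", ".", "3", "0", "ENTER"]:
    p.press(key)
print(p.x, p.y)  # 120 30
```

Arrows give coarse relative movement, while typed coordinates give exact absolute placement, which is roughly the division of labour the comment above suggests.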

Alternatives

To know if something is broken, one generally has to compare it to something that is fixed. The only viable alternative mentioned is the "guided menu system" above. I used to program them extensively back in the DOS and VAX days. A slight variation is that those systems often used the teletype "scroll" model, so the above menu would resemble:

----Menu----
1. Email
2. Typing (word processing)
3. Game
4. Play CD-Rom
Enter choice: __

The cursor would be at the bottom.

The GUI equivalent is the "wizard". So it is not a matter of GUI versus CUI (character user interface), but of whether the options are modal or not. I generally agree that modal is often better for newbies. However, it is not very productive for those with more experience. The CUI version of non-modal would be commands that jump to other options. Example:

....
3. Game
4. Play CD-Rom
Enter choice: spreadsheet

In this approach, the user can type in something not on the immediate menu ("spreadsheet" in this case) and go there. The drawback is that it requires more typing. GUI systems allow one to stick more options in the same screen real estate. While this may reduce typing, it can also create chaos.
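A minimal Python sketch of the two menu styles just described: a modal numbered menu in the teletype "scroll" style, plus the non-modal escape hatch of typing the name of a destination that isn't on the current menu. The option labels come from the example menu; the destination table and the function names are hypothetical.

```python
from typing import Dict, List, Optional

MENU: List[str] = ["Email", "Typing (word processing)", "Game", "Play CD-Rom"]

# Hypothetical table of every destination reachable by name, including
# ones not on the current menu (like "spreadsheet" in the example).
DESTINATIONS: Dict[str, str] = {
    "email": "Email",
    "typing": "Typing (word processing)",
    "game": "Game",
    "cd": "Play CD-Rom",
    "spreadsheet": "Spreadsheet",
}


def render_menu(options: List[str]) -> str:
    """Render the menu in the scrolling teletype style."""
    lines = ["----Menu----"]
    lines += [f"{i}. {name}" for i, name in enumerate(options, start=1)]
    lines.append("Enter choice: ")
    return "\n".join(lines)


def resolve_choice(text: str, options: List[str],
                   destinations: Dict[str, str]) -> Optional[str]:
    """Accept a menu number (modal) or a destination name (non-modal jump)."""
    text = text.strip()
    if text.isdigit():
        index = int(text)
        return options[index - 1] if 1 <= index <= len(options) else None
    return destinations.get(text.lower())


print(render_menu(MENU))
print(resolve_choice("3", MENU, DESTINATIONS))            # Game
print(resolve_choice("spreadsheet", MENU, DESTINATIONS))  # Spreadsheet
```

A digit costs one keystroke but only reaches what is on screen; a name costs more typing but reaches anything in the table, which is the tradeoff described above.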

I personally found that a well-designed CUI made me more productive than a GUI. But the problem is that they were often not well-designed, at least not for me. This brings up another issue. I fall into the EverythingIsRelative camp. If all the options were put into a data structure or database, then one could better tune it to his/her own needs. It is another case of SeparateMeaningFromPresentation. If the options were coded into a standard, then the presentation system can be swapped with different applications. One could use the presentation system that they are most comfortable with even with applications that have not come out yet (as long as they use the same meta standard). This may also be useful for the handicapped.

Applications would come with a database (or XML) of all their options. The "browser" for all these options would be built independently of the application (although one could be included as a default).
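A minimal sketch of SeparateMeaningFromPresentation for options, assuming the application ships its options as JSON (the text says "database (or XML)"; the schema and field names here are invented). Two independent "browsers" render the same data differently, and neither needs to know anything about the application beyond its manifest.

```python
import json
from typing import Dict, List

# Hypothetical manifest an application might ship alongside itself.
MANIFEST_JSON = """
{
  "application": "Editor",
  "options": [
    {"id": "file.open", "label": "Open...", "group": "File"},
    {"id": "file.save", "label": "Save", "group": "File"},
    {"id": "edit.undo", "label": "Undo", "group": "Edit"}
  ]
}
"""


def present_as_menus(manifest: dict) -> Dict[str, List[str]]:
    """One presentation: group option labels into menus, in declared order."""
    menus: Dict[str, List[str]] = {}
    for option in manifest["options"]:
        menus.setdefault(option["group"], []).append(option["label"])
    return menus


def present_as_commands(manifest: dict) -> List[str]:
    """Another presentation: a sorted list of command names for typing."""
    return sorted(option["id"] for option in manifest["options"])


manifest = json.loads(MANIFEST_JSON)
print(present_as_menus(manifest))
print(present_as_commands(manifest))
```

Swapping presentations means writing another function over the same data; the application itself never changes.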

For a simplified example, think of keyboard mappings. Every application has its own proprietary keyboard mapping system. If a standard could arise, then one could use the same keyboard mapper on different applications rather than having each app reinvent its own. It is basic InterfaceFactoring.
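The keyboard-mapping example can be sketched as InterfaceFactoring in a few lines of Python: the user owns one table from key chords to standard action names, and each application only declares which actions it implements. The action names, the KeyMapper class, and the shared action vocabulary are all hypothetical; no such cross-application standard is implied to exist.

```python
from typing import Callable, Dict, List, Optional

# One user-owned keymap: key chord -> standard action name (names invented).
USER_KEYMAP: Dict[str, str] = {
    "Ctrl+S": "document.save",
    "Ctrl+Z": "edit.undo",
    "F1": "help.show",
}


class KeyMapper:
    """Application-independent key mapper shared by every app (hypothetical)."""

    def __init__(self, keymap: Dict[str, str]) -> None:
        self.keymap = dict(keymap)  # copy so rebinding doesn't mutate the source

    def rebind(self, chord: str, action: str) -> None:
        """Change a binding once; every application sees the change."""
        self.keymap[chord] = action

    def dispatch(self, chord: str,
                 handlers: Dict[str, Callable[[], None]]) -> Optional[str]:
        """Run the app's handler for the chord's action; return the action run."""
        action = self.keymap.get(chord)
        if action is None or action not in handlers:
            return None  # unbound chord, or this app lacks the action
        handlers[action]()
        return action


events: List[str] = []
editor = {"document.save": lambda: events.append("editor: save"),
          "edit.undo": lambda: events.append("editor: undo")}
viewer = {"help.show": lambda: events.append("viewer: help")}

mapper = KeyMapper(USER_KEYMAP)
mapper.dispatch("Ctrl+S", editor)  # editor saves
mapper.dispatch("Ctrl+S", viewer)  # viewer has no save action: ignored
mapper.rebind("F2", "help.show")
mapper.dispatch("F2", viewer)      # the new binding works in any app
print(events)
```

Applications only name the actions they support; the chord table lives in one place, which is what lets a single mapper serve them all.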

Broken and useful

While one might argue about the brokenness of something, it can also be pointed out that most things are broken in one way or another. A real test, then, is not whether or not it is broken, but rather: is it UsefulUsableAndUsed? When it passes this test of utility, argument about its brokenness can be answered with the cold, hard reality that people (millions and millions of them) have used and do use this "broken" thing because ItWorks. When a product is developed so that it provides utility and functionality, it passes a test based on usability. It may fail the test of theorists and concept purists, lack beautiful and pure structural component integration, and produce many "failing tests" when rigorously tested against all possible configurations and scenarios, yet still succeed in getting the job done that a user requires, producing proper and accurate results from proper and accurate inputs and requests. Those who use this "broken" concept do not argue about how correct its internals and its concepts are; they just want something they can use to help them get their job done speedily and correctly.

Those who are concerned with its brokenness and desire to produce something more pure or correct are totally free to do so. But they must remember that for people to "buy into it", it must pass the test of being UsefulUseableAndUsed and do so in a manner that its users can say "ItWorks". -- DonaldNoyes

I don't really get your point. It's obvious that WIMP works well enough to be in daily use by a couple of billion people these days. However it also has well known flaws, which most people never even think about. A small number of people say "hey, we're all too complacent, come on, let's think of something better!" And your reply is, it's good enough, shut up until when and if you come up with something better? I don't see the logic in that. -- DougMerritt

Personally, my problem is less with people who're addressing or just talking about problems with current interfaces than with people who like to post long, invective-filled rants like the ones on this page without presenting alternatives. Saying "Burn all mouses" without providing an alternative means you're ranting uselessly, not advocating useful discussion on interface design.

Interface design is hard, it's highly subjective, and it's a lot more complicated than "CLI good, GUI bad", or even vice versa. Much of the information here is incomplete or wrong. For example, the reason televisions don't have multiple windows is that people don't multitask with them (except when they do, with picture-in-picture). Icons are used instead of text to conserve space, not because they're necessarily more readable. Menus aren't sorted alphabetically because consistent locations enable the use of spatial memory; that's why menu items are enabled and disabled instead of being dynamically created. One valid point is raised, which is the awkward and confusing naming of menu options, but it's not addressed, probably because it can't be: awkward and confusing naming can and will plague any interface you come up with. There's a disconnect between "power users" and keyboard shortcuts as well. Many power users don't use keyboard shortcuts; in fact, they use the toolbar. There's a really good reason for this: keyboard shortcuts are optimal for people who can type, since you don't need to move your hand off the keyboard to hit one. People who can't type aren't bothered by this and prefer to use the mouse. There are a lot of claims made about what users want or don't want, much of which is both unsupported and contradicts conventional wisdom, which is a good reason to simply discard it. My 4-year-old understands the mouse just fine, but since he can't reliably read yet, the keyboard makes little sense to him. How much more of a "beginner" user can you be? -- ChrisMellon?

Good points. But right there at the end, note that basic use of a mouse is one thing; that's just a spatial interface, and we're hardwired to interact spatially with the world. That's not the same thing as the whole WIMP kit and kaboodle. In particular I doubt that he's expert at left versus right click, nor single versus double click (and if he's using a Mac, there are similar issues). As for "beginner", that's part of the point: a common complaint is that too much WIMP design effort is aimed at beginners, yet being a beginner is inherently a temporary state - what about designing for power users? -- DougMerritt

I think the point of DonaldNoyes is less "shut up if it is good enough" than this: if ItWorks, it's not enough to say that it is broken (that's ShiftingTheBurdenOfProof), even if it is. And it's not even enough to point out a better alternative. One also has to provide a way from here to there. That's because Wimp is a local maximum, and even if there is some better or even global maximum, people will resist going there if there is no smooth transition. -- GunnarZarncke

Assuming that's the point, then I disagree. Those points would apply to a business discussion ("shall we put our money into something Wimp-based, or into something no one has invented yet?").

They are irrelevant to purely technical discussions, where frequently one starts with a problem, or even just a hint of a problem, or a desire to improve things, and it frequently takes a long time to arrive at a solution that one can prove is in fact better. If one says "it's not enough to point out something is broken", then one shatters that process right at the start, leaving no possible way to move forward.

Some people are interested in processes that will eventually yield something new, others are only interested in final proven results, and that's fine - but the latter should merely ignore discussions concerning the former, since they're not interested, not critique that process in which they are not interested. -- DougMerritt

What is the starting point and focus for a design meeting the needs of a "power user"? Can we identify what components, methods or presentation formats would best suit such a user?

First identify users, capabilities and expectations

Power users expect:

Tradeoff Between SimplicityAndPower?

Much of the discussion on this page isn't about WIMP (three GUI widgets and one input device), but about higher-level mechanisms which are (somewhat) orthogonal to WIMP - things like context-sensitive menus, tooltips, what button does what, and other controls/topics that are germane to most if not all modern windowing environments (and thus assumed to be part and parcel of wimp).

But what are the alternatives to wimp? The CommandLineInterface is one - many programmers like this because the same mechanisms they use to program (the keyboard) are used for other things, allowing a high degree of composability of the interface. A good CLI is a powerful tool indeed in the hands of the experts. But WIMP has largely subsumed the CLI - with the latter augmenting the mouse inside a terminal window, rather than replacing windows and mice and such.

Beyond that? Not much. Windowing nicely solves the problem of multiplexing the diverse output of numerous systems into a fixed-resolution viewport (your monitor). Most of the alternatives to windowing (3-d environments, paged/tabbed displays, etc.) are just solutions to the same problem: variations on the theme. Yet nothing has shown up to replace the graphical monitor as the primary means of interactive communication from the computer to the human. Printers and paper terminals aren't interactive; sound devices have much lower bandwidth than does the screen, and the other three senses have even lower bandwidth than our ears do. (Plus, effective transducers for taste and smell don't even exist.)

Going the other way, what do we have? Keyboards. Pointing devices (mice, trackballs, joysticks, etc.). Writing recognition. Speech recognition. The first two are the primary means employed by CLIs and GUIs respectively. The third seems to be limited to PDAs and signature-capture devices (in the latter case, the signature itself is the signal, rather than the channel). The fourth still has lots of problems.

Granted, there are lots of things that can be improved about modern user interfaces; however it's important to distinguish the physical devices from the mechanisms and protocols that ride on top of them.

As of November 7, 2007 we now have PowerShell, which is bound to change how people look at this topic.

Wimp is Broken? Not Mine. And it just keeps on being more and more useful, usable, and used, not only by me, but also by hundreds of millions of PersonalComputer users. If something comes along that is more useful, I'll be one of the first to find out how it can make my computing experience better. -- DonaldNoyes

Apple understands the importance of location. Microsoft, not so much. Customizable menus in Office were an abomination mainly for that reason. So is losing your icon layout when you change screen resolution; I mean, that's truly retarded. Mac folders remember their layout and icon positions, which you can sort of get Windows to do, but not reliably, and that's the other big Apple design skill: if you're going to put in a feature, make it consistent and reliable. Practice it in secret for a year like RickyJay? until you can pull it off invisibly.

Interacting with any modern WIMP system is like having a conversation with an amnesiac schizophrenic, or trying to get results from an oversized bureaucratic department. See GuiAsConversation.

<yawn> Somebody resuscitated this old beater? Why? This discussion is as dead as text-based menus.

20121028 - What's This?

"THIS" is a disaster.

Could this be Wimp? Except that the pointer is your finger touching the screen (you can still use the mouse, however).

No, this is not WIMP. This is crap. Specifically, Metro crap from some gang of ditherheads who think a desktop is the same as a smartphone. Can you imagine grabbing your 700mm desktop monitor and using your thumbs to press "buttons" near the sides? Duh, der, dim. Microsoft "engineers" are "learning" from crApple. Dork.

No, but I will use a handheld control (remote) which will wirelessly perform all the necessary operations upon and within it, whether near it or a thousand miles away. That control will rely on fingers and thumbs acting upon icons and images on phone-like or pad-like devices without buttons, switches, or mechanical controls. They will use Windows, Icons or Tiles, moving and pointing control actions on menus serving as navigators and actuators.

Well, yeah. Just as I will use the icons on the "desktop" of my Galaxy S III (the phone that Apple wishes they had made) to do all kindsa stuff that I'd rather not type or menu to. But these are dedicated applications to do specific tasks on a limited device. The desktop computer is not a limited device aimed at specific tasks. By its very nature it is a broad spectrum solution looking for a problem to solve. The WIMP interface is suited to this nature. Metro is not.

To be fair, a lot of desktop environments have been moving toward a similar design, and Windows 8 simply has the 'Metro' or 'Modern' UI spin. Gnome/Unity has the new 'wall of icons' navigation style option, OS X has an iPad-as-a-desktop navigation style option, and now Windows. OS and UI have to evolve. Microsoft plays in both the desktop and mobile space and clearly wants to merge the two (see the Surface tablet, sold as essentially a laptop, and Win 8, written for x86 and ARM with mobile and desktop sharing the same source). You are operating a Windows machine with a 'Windows user' mental model of 'oh, I need all these advanced features', but what happens to the newer generation who grow up with iPads? MS must stay relevant. -gv

True, but if by staying "relevant" you mean appealing to the masses who have the "iPad" generation "mental model", are you then saying the part of the masses who make the "buttons" and "tiles" work is not relevant? I am interested in what development processes are available which you can use to make old "operational" processes work without requiring a total change of how they work. Is there an SDK available which runs directly on the "Surface" tablet that makes this possible?

Huh?

The "Metro style" Start panel is a non-hierarchical menu, with live update of the large icons aka tiles. It's the same old Start menu, dumbed down for the FondleSlab generation. In some ways, it harks back to the box-of-icons style of Windows 3.x. The apps themselves are the same old WIMP interface, with flat-shaded decoration and touch capability. There's nothing to see here, folks. Move along.

ok

See: MetroStyleStartPanel, MiniatureFootprintComputing, TwoClicks

CategoryComparisons, CategoryInteractionDesign, CategoryUserInterface, CategoryRant

(last edited March 1, 2014)