Big Ball Of Mud


From "Big Ball of Mud" by Brian Foote and Joseph Yoder (http://www.laputan.org/mud/mud.html):

[EditHint - link broke?]


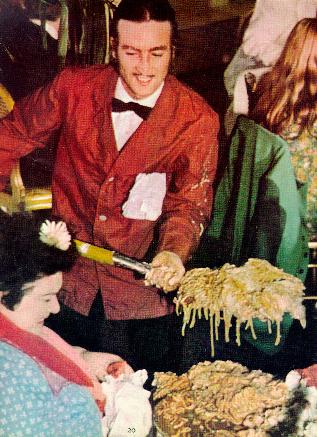

JohnLennon shovels on the spaghetti code for a much-needed CodeReview, otherwise known as a MagicalMysteryTour.

The full paper is available for download in PDF format at http://www.joeyoder.com/PDFs/mud.pdf

Contrast SoftwareCrystal. Also see JoelMosesOnAplAndLisp.

Also, take a look at SystemsAsLivingThings, WorseIsBetter, and YouArentGonnaNeedIt. It seems to me that there is commonality among all of these threads, something like ReactiveDevelopment?, but it is kind of nebulous to me right now. Anyone want to take a crack at it? -- MichaelFeathers

Reactive has a somewhat passive, knee-jerk feel to it. I've always preferred Opportunistic to describe the way that one seizes chances to make the system more reusable, general, and comprehensible as you address changing requirements. (Opportunity is the sunny alter-ego of Boehm's Risk driven approach, which amounts to kicking the nearest, nastiest wolf from your door first.) Rather than trying to get the architecture right up-front, and hitting some reusable abstractions while missing others, you glean these as the commonalities become evident, and refactor to give emerging abstractions distinct identities.

YouArentGonnaNeedIt seems to apply a little CreativeStupidity to say: pretend you are not as smart as you think you are, and wait until this clever idea of yours is actually required before you take the time to bring it into being. In the cases where you were right, hey, you saw it coming, and know what to do. In the cases where you were wrong, you don't have to dismantle the "spec home" you built that no one wants to move into, and that is in the way of the new road that needs to be built. In the cases you didn't see coming, well, you could say you knew about them all along too, but that would be dishonest.

Consolidating these insights late in the lifecycle is actually an inherently more conservative strategy than master planning, since it employs hindsight rather than foresight to drive architectural innovation, and, as we all know, hindsight can be pretty reliable. For this to work managers must be ready to acknowledge that design pervades the lifecycle, and that refactoring is what drives the evolution of better, more reusable objects.

This perspective is compatible with a basic notion of SystemsAsLivingThings: systems grow, evolve, and change, and, in the sense that they come to embody an increasingly accurate model of a wider swath of their intended chunk of design space, they learn.

BBoM talks some about what happens if there is no way for code to get better, and speculates that for some problems and organizations, BBoM may be a state-of-the-art strategy. It talks some about how to avoid or rehabilitate them, but leaves the heavy lifting in this regard to some older papers linked from BBoM (which have a strong Redemption through Refactoring theme).

I like the way ExtremeProgramming tells the tale of how you don't make something general until the last nanosecond before you desperately have to have it. In practice, you might learn to see things coming a little sooner, but XP's story is easier to tell.

It's possible to go too far in portraying these approaches as merely reactive, since it takes insight, experience, and skill to discern and exploit the opportunities to make things better.

-- BrianFoote
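To make the "glean commonalities as they become evident, then refactor" idea above a little more concrete, here is a minimal sketch in Python. It is only an illustration: the invoice domain and every name in it (`total_where`, `overdue_invoice_total`, and so on) are hypothetical and do not come from the paper. The point is that the shared abstraction gets a name only after a second, real use makes it visible.

```python
# Hypothetical example: all names and the invoice domain are invented for illustration.

# Before: two functions written at different times repeat the same
# "filter, then total" logic, but the commonality has no name yet.
def overdue_invoice_total_before(invoices):
    total = 0
    for inv in invoices:
        if inv["overdue"]:
            total += inv["amount"]
    return total


def disputed_invoice_total_before(invoices):
    total = 0
    for inv in invoices:
        if inv["disputed"]:
            total += inv["amount"]
    return total


# After: once the second case makes the pattern evident, the emerging
# abstraction is given a distinct identity of its own.
def total_where(invoices, predicate):
    """Sum the amounts of the invoices for which predicate(invoice) is true."""
    return sum(inv["amount"] for inv in invoices if predicate(inv))


def overdue_invoice_total(invoices):
    return total_where(invoices, lambda inv: inv["overdue"])


def disputed_invoice_total(invoices):
    return total_where(invoices, lambda inv: inv["disputed"])
```

Nothing here was designed up front; `total_where` exists only because two concrete needs turned out to share a shape, which is the hindsight-driven strategy described above.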

I didn't mean "reactive" in a pejorative sense. I was really talking about the fact that the system growth happens in response to the environment rather than being elaborately planned.

Could there be a way that we can structure software to capitalize on the fact that without due diligence it becomes a BigBallOfMud? Sometimes natural processes can be used to our advantage. I know that it sounds insane, but if we are able to minimize the amount of structure needed to make elaborate software, could we not have something which survives entropic onslaught better than our current artifacts?

I have an idea about how this could be done, but I'm pretty sure that it is flawed. In any case, I find value in thinking about these things. I learn from where they fail. The idea I have in mind could either degenerate into functional decomposition, or just end up being no more useful than object-orientation. If I'm not pounced upon for putting forth a possibly flawed idea for discussion, I'll start on ChainOfResponsibilityEngine soon. This NoveltyVampire? does not want anyone to lay down any blood that they can not spare.

No more useful than object-orientation, eh? My experience has been that minimal structure is good when you are exploring the design space, and that structure emerges as well-worn paths are established and paved over. Mature frameworks and components are better able to resist being washed away by entropic tempests than are less securely anchored low-level code artifacts, or even applications as a whole.

Now, if what you mean about minimizing structure is minimizing the amount of work, or bookkeeping needed to bring structure into being, I'll buy that. I think one of the reasons that iterative/incremental/evolutionary styles of programming are taken for granted in the Lisp and Smalltalk worlds, and are still largely unknown in the C++ world (the jury is out on Java) is that you don't have to change declarations in as many places to refactor in these languages. Hence, culling structure from clutter is easier, and can be carried out with a minimum of premeditation (opportunistically).

Perhaps resistance to change (away from C++ to Lisp or Smalltalk) is a result of a LanguageChoiceImposesSocialStructure?

In keeping with the spirit of this discussion, decisions as to whether to pounce would properly be deferred until after we've had a chance to examine the material in question (to say nothing of sundown).

-- BrianFoote

I think a major failing of traditional development methods is the lack of refactoring. Without that, any system will fall apart over time due to maintenance, no matter how well designed and planned it was during initial development. I once saw a formal presentation showing how systems must invariably lose structure over time, due to maintenance, until they're unworkable and have to be rewritten. I don't believe it:

My experience has been that systems can be refactored over time to improve their architecture. Unless the business' basic model of operation changes, or there's a need to change computing technology, there's no reason why quality must deteriorate over time.

But no one teaches refactoring as part of development or maintenance; most methodologies describe a complete "life cycle" that ends with delivering a system, and append a comment to the end saying that ongoing maintenance is done the same way, only with smaller cycles. It's generally assumed that everything will be done right the first time, and nothing will need to be changed -- ever! (...shades of OpenClosedPrinciple, for example.) This couldn't be further from the truth.

Some extreme-ists may refactor all the time, to such an extent that it becomes the way they do design. But even if you're not doing ExtremeProgramming, conventional projects using outdated methodologies still need to learn the value of refactoring.

-- JeffGrigg

FutureDiscounting generally disfavors heavy refactoring. Besides, many managers have complained that they just have to throw out languages and start with new ones anyhow. If you have to toss it because the language is obsolete, then why bother refactoring all the time? After being burned multiple times, telling them that Java or Foo-bar language is the final and best language (and forever supported) won't go over well. The purge cycle allows them to start with a relatively clean slate and switch languages in one step. We live in a disposable business culture for good or bad. --top

While I can agree with you about 50% of the time, top, I agree with Jeff here. I tend to write what most would call procedural code, and it is tight, fast and small; but it would be problematic if not unmaintainable if I didn't use a sort of refactoring at every change. My refactoring KEEPS my code tight, fast, and small. --LlewelynThomas

FutureDiscounting doesn't come into play unless you speculatively refactor to meet unknown future requirements. Refactoring to prepare to satisfy new but already known requirements is the kind of refactoring promoted by the XP guys and by Martin Fowler's Refactoring.

Personally I find Refactoring easier to "get right" than scratch-coding. Translating a well-factored program into a new language is easy; writing from scratch is liable to introduce new bugs. --nn

See AmorphousBlobOfHumanInsensitivity, http://www.xpsd.com/RefactoringDemo (BrokenLink 2004-04-08), DeprecationRefactor, ExtractAlgorithmRefactor, RefactorLowHangingFruit.

A few days ago, I posted a page on TheBlob - the two seem related. Are they the same? - SriramGopalan

Isn't XP 'reactive' by its nature?

Anyone want to propose some objective measures? Lack of OnceAndOnlyOnce may be one, but probably not the only one. Lots of global variables, perhaps. But cleaning up global variables will not necessarily reduce messiness. The EverythingIsRelative view is probably that all languages, paradigms, or techniques that are not my favorite are a BigBallOfMud, at least to me. I can envision this kind of exchange:

RedHat: X stinks, you should use Y.

BlackHat: I like X. What is wrong with X?

RedHat: X makes a BigBallOfMud

BlackHat: No it doesn't. Y is what makes mud.

RedHat: Prove it!

BlackHat: Just look at Y. It is hard to find anything.

RedHat: That is because you are not experienced enough with Y.

BlackHat: No, it is that you are not experienced enough with X to see that it is better.
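On the question of objective measures raised above, here is a rough sketch of how the two candidates mentioned (lots of global variables, lack of OnceAndOnlyOnce) might be counted for Python source. Both functions and their names are hypothetical, the 20-character threshold is an arbitrary assumption, and these are crude proxies at best: as noted, a low global count does not by itself mean the code is less messy.

```python
import ast
from collections import Counter


def count_module_globals(source):
    """Count distinct names assigned at module level (a rough proxy for globals)."""
    tree = ast.parse(source)
    names = set()
    for node in tree.body:  # only top-level statements, not function bodies
        if isinstance(node, ast.Assign):
            targets = node.targets
        elif isinstance(node, (ast.AnnAssign, ast.AugAssign)):
            targets = [node.target]
        else:
            continue
        for target in targets:
            for sub in ast.walk(target):
                # Store context means the name itself is being bound,
                # which excludes the `obj` in `obj.attr = ...`.
                if isinstance(sub, ast.Name) and isinstance(sub.ctx, ast.Store):
                    names.add(sub.id)
    return len(names)


def count_repeated_lines(source, min_length=20):
    """Count extra copies of identical stripped lines at least min_length long.

    A very crude stand-in for a OnceAndOnlyOnce violation detector.
    """
    lines = (line.strip() for line in source.splitlines())
    counts = Counter(line for line in lines if len(line) >= min_length)
    return sum(n - 1 for n in counts.values() if n > 1)


SAMPLE = '''
counter = 0
cache, limit = {}, 10

def f(order):
    total = order.price * order.quantity * 1.08
    return total

def g(order):
    total = order.price * order.quantity * 1.08
    return total + 5
'''

if __name__ == "__main__":
    print("module-level names:", count_module_globals(SAMPLE))
    print("repeated lines:", count_repeated_lines(SAMPLE))
```

Numbers like these can start a conversation between RedHat and BlackHat, but they do not settle it; they measure symptoms, not whether the structure fits the problem.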

EditText of this page

(last edited July 21, 2013)

or FindPage with title or text search
