Fuzzy Predicate

Fuzzy Predicate Fuzzy Predicate

Fuzzy Predicate(This was mentioned once before, but I cannot find it.)

A fuzzy predicate can be used to find the best match(es) based on proximity. For example, suppose we had a table like this:

A:0.84, B:0.20, C:0.92, D... A:0.03, B:0.70, C:0.27, D... A:0.64, B:0.31, C:0.74, D... Etc...and want to find the 6 closest matches based on these values:

SELECT TOP 6 * FROM foo WHERE A=0.7 AND C=0.2An exact match is not required, but the 6 closest matches are returned. In this data set, the factors are normalized to be between zero and one. Another potential limiter "clause" is the best matches within a given amount of time. For AI use, the time limit may not be known in advance. One may need a way to supply an interrupt signal and ask, "give me your best stuff so far". Fancier versions have importance weightings.

-top

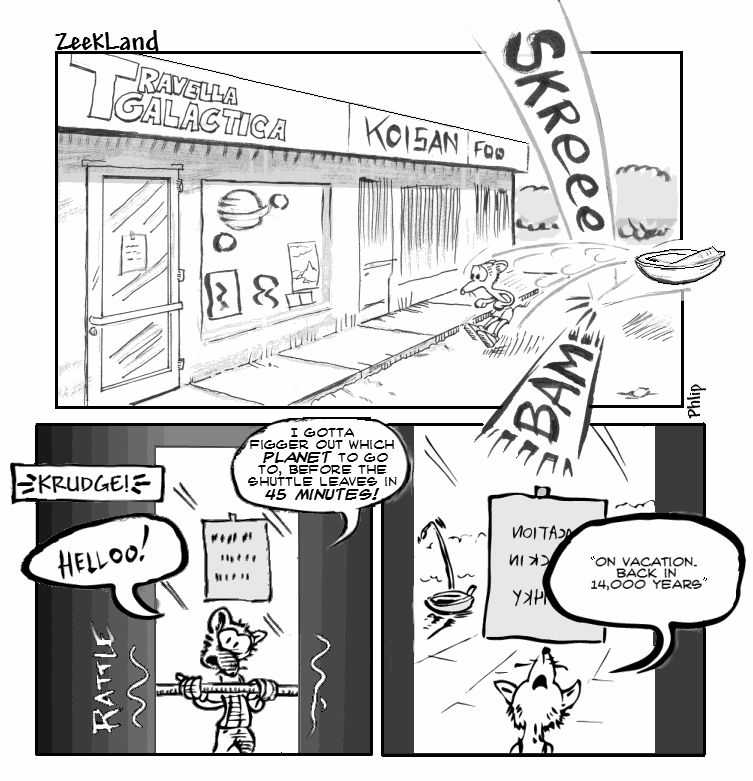

awesome. --PhlIp.

http://zeekland.zeroplayer.com/The_Elaborate_Art_of_Play_Part_1/5

See EuclideanProximitySearchEngine

How about "LIKE", "Wildcards", "Random", "Less Than", "Greater Than". Databases generally have these proximity checkers. Like the brain, the database either returns a result or doesn't... if it returns a fuzzy result back like a number between one hundred and two hundred (even though we wished it could have been more precise), it is still a result. If the brain cannot answer a question and says "maybe, I do not know" then this can be represented as a string called "maybe", or an enumeration (yes, no, maybe), or even heaven forbid a null.

No. Most off-the-shelf DBs don't have support for multi-valued proximity.

This is not a yes or no question (not asking for clarification, it was a rhetorical question to make you folks think for a minute about what databases do offer.). Databases can check for less than, ranges, greater than, LIKE, wildcards. These give approximate results - they are still definite results but if you think about it.. every thought we have is definite too, not in that it is a yes or no, but that it is a result that has physical/electronic presence in some form. For example when the brain searches for something that is "good" a database query could look for 6-8 out of a 10 using a range, or using less than and greater than. A nine or ten might be defined as excellent. A five wouldn't be that good. Several queries could also be placed into a temporary table and analyzed further for more approximations. Nulls are very fuzzy in a bad way.

That is because this is difficult to index in general.

I encountered this "fuzzy" issue when reading about "self-organizing maps" (SOM) for AI use, and wondered how to implement a similar contraption in an RDBMS. These seem closer to mimicking how humans think and retrieve memories than Boolean-expression-centric RDBMS. --top

How about ...

SELECT TOP 6 * FROM foo ORDER BY ABS(A-0.7) DESC, ABS(C-0.2) DESC... or ordering by whatever equation gives you a measure of 'closeness' that floats your boat, weighting A and C somehow. I'd much prefer something like that which expresses what I want rather than some DBMS implementor's notion of what "close" means. (This is perfectly feasible with current products.)

While it may produce the correct answer on some RDBMS, I doubt that can take advantage of indexes in most RDBMS, and thus require an entire table scan, plus some expensive sorting. Further, in Oracle it's very round-about to get the equivalent of "TOP X". "Rownum" usually doesn't apply to the sort stage, but chops before that, producing unexpected results.

I have heard that Oracle has some geo indexing special feature which might answer the above query efficiently - provided the index is set up properly. But this manual index setup seems to be what Top wants to avoid. An AdaptiveCollection would be nice that could infer/learn the necessary indexing automatically from the usage pattern and a laaarge library of possible indexing strategies.

I'd rather stick to something that at least somewhat resembles RDBMS. The indexing techniques are probably orthogonal to the query language or paradigm issues.

If the differences in the factors A and C have the same value, then a least squares function can be computed:

(A-0.7)^2 + (C-0.2)^2The smallest values of this will give the nearest points. -- JohnFletcher

Sure... relative to a line in a Euclidean space. 'Closeness' measurements require a distance function depending on the space. Consider the difference if C wraps around 0.0-pi, such that 0.2 is approximately equidistant from 0.4 and 2.94. Such things are somewhat arbitrary - not even the triangle inequality always holds for all spaces. Dealing with arbitrary clustering of data for DataMining purposes is the reason I came to the conclusion that something more generic, like a DataSpace, is better than relational when it comes to AI and learning systems.

As mentioned in DoesRelationalRequireTypes, for flexibility purposes, it would be best to define relational independently of the "domain math" such that things like "distance()" functions/operations would not have to be hard-wired into the relational engine and could be defined as needed. However, it may be difficult to optimize for such because optimization usually requires a fair amount of integration. At best a kit could be produced to allow custom relational engines to be built for special/different domains (like polar coordinates and AI) without starting from scratch for each domain. It would still be custom, but using mostly off-the-shelf or pre-defined parts for the construction. --top

The link below has an interesting description of "quad-tree indexing", which allows for improved physical-promixity indexing. It perhaps could be extrapolated to more factors than X and Y.

http://www.ebatty.com/exploiting_rdb_in_gis.htm

Indeed, it can easily be extended. 'KD-tree' is the common name for the general extension. See EuclideanProximitySearchEngine.

It's not clear to me if factors that are complete non-matches will mess this up. The first factor could be completely wrong, and even if all the rest are close, we'd never find the best, or even a good match. How are the outright dead-ends bypassed? A purely hierarchical approach can get tripped up by one bad branch. I can envision a genetic algorithm finding groupings or chunks of variables that are a better fit, but that could be computationally expensive.

This isn't a problem. If you are looking for all objects with certain criterion, then one failed criterion or match SHOULD mess it up. If you are looking for K-closest matches (where K is 100 for example - the 100 closest points), then you use a backtracking search if you either have not found K items or cannot make a guarantee that all points on a certain branch are too far away to beat the K in your current set. See EuclideanProximitySearchEngine.

CategoryRelationalDatabase, CategoryArtificialIntelligence, CategoryFuzzy, CategoryDataMining

EditText of this page

(last edited November 20, 2014)

or FindPage with title or text search

EditText of this page

(last edited November 20, 2014)

or FindPage with title or text search