Captcha Test

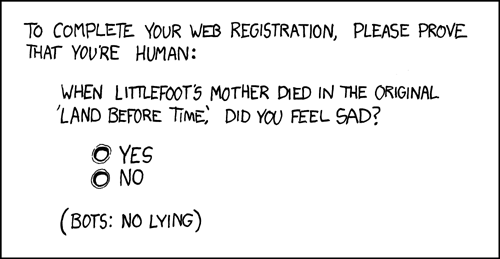

A "Completely Automated Public Turing test to tell Computers and Humans Apart".

Kinds of captchas:

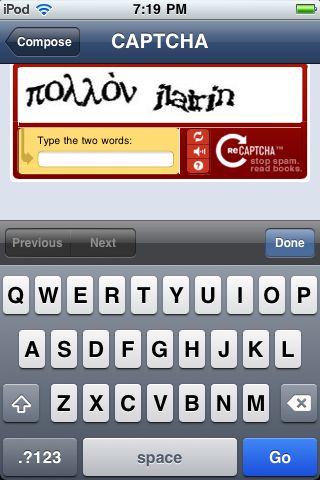

This is a cute idea, but what about the visually impaired? In practice you've lost some accessibility. It's just as bad for people with dyslexia - I probably have a mild case myself, as I'm notorious for transposing digits in numbers even when they're clearly printed; it's only worse when I can't tell whether that's a '6', a 'b', or a 'G'... -- CodyBoisclair

True, yet there are efforts to increase accessibility: reCAPTCHA, for example, has a function that lets you listen to the CAPTCHA. I think this is quite fantastic, as accessibility on the Internet and on computers in general is very important.

It doesn't take long for the creative types to adapt to new technology; see Captcha Poetry: http://www.collisiondetection.net/mt/archives/2003/11/captcha_poetry.php

How captchas could be broken

Some progress has been made in software that can break a captcha: http://www.cs.berkeley.edu/~mori/gimpy/gimpy.html.

Given sufficient infrastructure, you can work around a CaptchaTest. You run a site offering something lots of people are willing to go to a little trouble to get; pornographic images are the usual choice. Then you make them solve captchas to get at whatever-it-is. Then you hook this up to your automatic spamming machine, or whatever else captchas are getting in the way of, and (provided you have enough people visiting your site often enough) you're done: your program doesn't need to solve them, it just needs to pipe them to people willing to solve them for you. Artificial intelligence is more expensive than natural stupidity. Others are using this concept of aggregate diversification for complex photo analysis. See http://en.wikipedia.org/wiki/Amazon_Mechanical_Turk.

However, if you dynamically generate the captcha image and limit access via Referer and similar checks, you also make such schemes harder to run.

Hardly... the point isn't that you forward the users to the captcha; rather, you grab the captcha, present it to the human, grab the response, and stuff it into the response field for the captcha. Timeouts on the captcha are worked around by having an appropriate amount of traffic.

Captchas are subject to man-in-the-middle attacks. Suppose I create a page with desirable content and do this:

Well, I would just put the address of my site on the picture too, probably in another color. On my site there would be a note asking visitors to ignore it.

But they are worthwhile, even if they are not perfect

Captchas are one of those areas of ComputerScience that are plagued by computer scientists. Nothing is proposed without some egghead proclaiming that "I can work around it, so you can't use it, even though it is GoodEnough." People love to point out that your system isn't perfect so they can make a posting on comp.risks.

If a spammer on my site sees "Here are five numbers. Add 1 to the first number and type it here" he can't code up an automatic response to it without knowing the source or loading up lots and lots of pages and ReverseEngineering my routine. And even if he does, in 30 seconds I can add a dozen new variations, which multiply combinatorially to huge possibilities. "Add twenty to the smallest number." "One of these numbers isn't odd. Type its first four digits." "Ignore everything else here and type two dozen." I can always engineer faster than the spammer can reverse engineer, by orders of magnitude.
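A minimal sketch of the rotating-instruction idea above, in Python. The function names, the two-digit number range, and the choice of only three variations are assumptions made for illustration; a real site would keep its own, longer list and change it often.

```python
import random

def make_challenge(rng=None):
    """Return (question_text, expected_answer) for one randomly chosen variation."""
    if rng is None:
        rng = random.Random()
    # Five distinct two-digit numbers (the range is an arbitrary assumption).
    numbers = rng.sample(range(10, 100), 5)
    # Each variation pairs an instruction with the answer it implies.
    variations = [
        ("Add 1 to the first number and type it here", numbers[0] + 1),
        ("Add twenty to the smallest number", min(numbers) + 20),
        ("Ignore everything else here and type two dozen", 24),
    ]
    instruction, answer = rng.choice(variations)
    listing = " ".join(str(n) for n in numbers)
    return f"Here are five numbers: {listing}. {instruction}.", str(answer)

def check(expected, submitted):
    """Compare the submitted text with the expected answer, ignoring outer whitespace."""
    return submitted.strip() == expected
```

Adding a variation is one more tuple in the list, which is the asymmetry the paragraph above relies on: the site owner edits a line, while the spammer has to notice the change and re-script.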

Captchas aren't a SilverBullet. But if you drive up the costs of the spammer even marginally, and have other defenses in place, he'll go somewhere else (or preferably out of business).

[The following discussion moved from WikiSpam.]

To get around spammers who target many wikis, perhaps add a number-typing page that a visitor must pass before a specific IP is allowed to post. Example:

Below is to reduce spam

1. 42143
2. 88207
3. 97828
4. 16289
5. 38832

Please enter the value at #3 above to continue: _______

The digits and which one to use would be randomly generated. Once an IP passes this test, it can post for say up to 1 hour after inactivity. As long as this specific technique does not catch on to other wikis, it won't be worth it to most spammers to script around just one wiki. Instead of digits, perhaps random wiki topic titles could be used to make it more friendly. -- top
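The proposal can be sketched roughly as follows, in Python. The names `new_challenge` and `PassList` are hypothetical, and the in-memory dictionary is an assumption; a real wiki would need persistent storage and a way to learn the poster's IP.

```python
import random
import time

PASS_SECONDS = 3600  # "up to 1 hour after inactivity", per the proposal

def new_challenge(rng):
    """Build the numbered list and return (prompt_text, expected_value)."""
    values = [f"{rng.randrange(100000):05d}" for _ in range(5)]
    pick = rng.randrange(5)
    lines = [f"{i + 1}. {v}" for i, v in enumerate(values)]
    prompt = "\n".join(lines)
    prompt += f"\nPlease enter the value at #{pick + 1} above to continue:"
    return prompt, values[pick]

class PassList:
    """Remembers which IPs passed the test and when they were last active."""

    def __init__(self):
        self._last_seen = {}

    def grant(self, ip, now=None):
        self._last_seen[ip] = time.time() if now is None else now

    def may_post(self, ip, now=None):
        now = time.time() if now is None else now
        last = self._last_seen.get(ip)
        if last is None or now - last > PASS_SECONDS:
            return False
        self._last_seen[ip] = now  # activity restarts the inactivity timer
        return True
```

Note that the expected value is printed in the prompt itself, so this sketch has exactly the weakness raised in the replies: a script can read the answer off the page.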

Nice idea, but it doesn't work. A robot can just generate answers at random. One in five will succeed, letting it spam for an hour.

Who needs to generate answers at random? I could write Perl to break the scheme TopMind describes above in a matter of minutes. A more sophisticated kind of CaptchaTest is needed. Random topic titles are good, but better is a natural language question (e.g. perhaps, "Which is the odd one out? A. fish B. sheep C. harpsichord D. cat E. bird"). Making a computer understand natural language is hard. Of course, to avoid the random-answer problem you describe, something like rate-limiting for IPs generating a certain number of false answers may be required. Plus more complexity might be needed to reduce the odds of a random success, maybe two simple questions side-by-side. -- EarleMartin
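The rate-limiting part could look something like this sliding-window counter, in Python. The class name, the threshold of three failures, and the ten-minute window are all assumptions chosen for illustration, not values anyone in this discussion proposed.

```python
from collections import defaultdict, deque

class FailureLimiter:
    """Blocks an IP once it has too many wrong answers inside a time window."""

    def __init__(self, max_failures=3, window_seconds=600):
        self.max_failures = max_failures
        self.window = window_seconds
        self._failures = defaultdict(deque)  # ip -> timestamps of wrong answers

    def record_failure(self, ip, now):
        self._failures[ip].append(now)

    def is_blocked(self, ip, now):
        recent = self._failures[ip]
        # Forget failures that have aged out of the window.
        while recent and now - recent[0] > self.window:
            recent.popleft()
        return len(recent) >= self.max_failures
```

A caller would check `is_blocked` before showing a challenge and call `record_failure` on each wrong answer. This only slows guessing from a single address; it does nothing against a bot spread over many IPs.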

{The value of custom script scraping a single wiki is probably not worth it to spammers. Spam has to deal with large volumes to be effective, and thus multiple wikis. If many other wikis adopt it I agree that it would probably be targeted. But I doubt they would bother with one. -- top}

Answering natural language questions is currently too hard for most computers, but if it's multiple choice, random answers still win. You need the potential poster to have to choose from an answer pool of thousands. You must ask them to type an answer that is not already in the question.
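To put rough numbers on this: if a bot's guesses are independent and every answer in a pool of n is equally likely (both simplifying assumptions), the chance that at least one of k guesses is right is 1 - (1 - 1/n)^k. A small Python sketch, with a hypothetical function name:

```python
def guess_success_probability(pool_size, attempts):
    """Chance that at least one of `attempts` uniform random guesses is correct."""
    return 1 - (1 - 1 / pool_size) ** attempts
```

With five choices a single guess succeeds one time in five, as noted above, and three guesses succeed nearly half the time (about 0.488). With a pool of 5000 typed answers, three guesses succeed well under one time in a thousand.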

That there shouldn't be any answer for the machine to read is a very good point. I think the idea behind CaptchaTests as they exist is to be maximally accessible by as many people as possible and not machines (hence that wobbly-text you see when registering on some sites, which we can read and the machines can't - for the most part; I know some progress has been made in that area). If the user has to come up with an answer out of whole cloth, it increases the chance of some humans failing the test, doesn't it? I'm interested in the kind of test that presents you with the answer, like a series of pictures with the question "what are these pictures of?" (answer: fruit, cars, men, or other generic classes). They present a pretty big answer pool, I guess. -- EarleMartin

At this time bots seem unable to process natural language questions. Perhaps the bot writers have not considered the effort worth their time. The WordPress system wp-gatekeeper, which requires the system administrator to generate questions, is (to date) 100% effective. Questions like "In the ocean you will not find whales, tuna, water, camels, kelp or otters?" also provide a chance for some humor. -- MichaelRasmussen

If the answer is any part of the question then spammers can simply choose some part of the question at random. It will work often enough to defeat the system. They could even try the same edit multiple times, each with a different part of the question. Yes, we need a TuringComplete-type of test. Given the recent history of this wiki I would have no problem with asking questions that not all humans would get right. I wouldn't insist that contributors have a certain minimum level of intelligence, knowledge or competence.

Just use the same system that free email systems use: print out a word or number that is slightly mangled, so that humans have slight difficulty reading it but for computers it is almost impossible. If the human cannot guess it, he gets another chance. There are thousands of possibilities and, unlike natural-language questions, non-English speakers can understand it (this is useful since certain pages can be in a different language, but the security is implemented on the system). -- ArtemusHarper

This has been discussed extensively elsewhere - it discriminates against visually handicapped users. Computers can also solve this 80% of the time now, so it won't be a solution much longer anyway.

Why resort to a multiple-choice paradigm? There is an extension for Oddmuse, the Question Asker Extension (http://www.oddmuse.org/cgi-bin/oddmuse-en/QuestionAsker_Extension), that asks a natural language question that has to be answered in natural language. Maintainers can come up with any sort of questions and answers, e.g. "What is the capital of France?". One of those questions is picked randomly for each edit. This circumvents the problems of CAPTCHA and multiple-choice systems, IMHO. -- Manni
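The general shape of such a scheme, as a Python sketch. This is not the Oddmuse extension's code or configuration format; the names are hypothetical, and the second question and the list of accepted spellings are invented for illustration.

```python
import random

# Maintainer-written questions, each with the set of accepted answers
# (already lowercased). Only the first question comes from the comment above.
QUESTIONS = [
    ("What is the capital of France?", {"paris"}),
    ("How many legs does a cat have?", {"4", "four"}),
]

def normalize(text):
    """Lowercase and collapse whitespace so trivial differences don't fail humans."""
    return " ".join(text.lower().split())

def pick_question(rng):
    """Choose one question at random; return (index, question_text)."""
    index = rng.randrange(len(QUESTIONS))
    return index, QUESTIONS[index][0]

def is_correct(index, submitted):
    return normalize(submitted) in QUESTIONS[index][1]
```

For example, `is_correct(0, "  Paris ")` is accepted while `is_correct(0, "London")` is not. Listing several accepted spellings per question is one small way to soften the "only one valid answer" objection, though it does not remove it.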

I am pointing out that what you are suggesting is not, by definition, a CAPTCHA. I am not saying that it won't work, but I do wonder where you will get your body of questions. If you allow people to generate them for you, how do you know that nearly everyone, including non-native speakers of English such as myself, will be able to answer them? How will you know that there is only one valid answer, or that you have found all possible valid answers? How do you know it doesn't contain a typographical error, such as your question does?

What you are suggesting may work, but I was trying to highlight some of its weaknesses so we could perhaps strengthen it. We might even be able to find a true CAPTCHA based on your idea.

[The following copied from DefensiveScriptIdea]

An unethical acquaintance of mine has been running them through image filters and OCR and gets about 95% correct. Admittedly, not everyone can do that, but programmers definitely have an advantage there.

2005-2-21

Good point. Let's get the terminology problem out of the way first. Since CAPTCHAs are supposed to be completely automatic, one might say (if so inclined) that CAPTCHAs cannot exist in the first place. Some person will have to think them up, some person will have to implement them, some person will have to install them, configure them etc. So in a really strict (and admittedly stupid) way, those images with numbers and letters on them are as much a true CAPTCHA as the questions asked by, e.g., the question asker extension for Oddmuse.

Viewed from a different angle, those CAPTCHAs aren't CAPTCHAs because you cannot use them to tell computers and humans apart. Artificial Intelligence hasn't improved much in the last 20 or 30 years, but looking at an image and extracting the numbers and letters on it is something that a computer is able to do these days (given the correct software, of course). Luckily, the correct software is big, fat, and ugly and spammers aren't able to use it in their bots yet.

I don't think that we need a system where the questions are devised by a machine. I'm sure somebody could come up with such a system, but I don't see why we should go to the trouble. If each wiki came up with its own set of questions that 99.9% of its users could easily answer, we would not have a spam problem. If a particular wiki came up with questions that could not be answered by non-native speakers, they would have made a mistake. But, ironically, it is the same mistake that people using graphical CAPTCHAs are already making all across the internet. They lock real people out along with the bots. If we don't care about the visually impaired, why should we care about those non-native speakers?

To summarize this: Whether your CS lecturer would call it a CAPTCHA or not is beside the point. The point is that there is an easy way to eliminate spam while keeping the whole thing accessible even for the blind and non-native speakers. -- Manni

And indeed, using domain-specific questions could bring another form of elitism to the wiki; a certain amount of domain knowledge could be required to edit, filtering out not only robots but perhaps some trolls. -- Brock

Still, one of the traits of the common Web is visitor laziness. You will find that if you put your email address inside an image, or you force people to copy and paste your email address into their email client, you will get less email from your Web site visitors or customers, and less feedback. People on the net are lazy "buy it now", "click it now", "order now", "participate now" types.

Intelligent programmers or really interested customers are generally willing to put up with a bit of extra work, though. But for the general customer or non-programmer, you'd be surprised at how many people will just leave and forget your website altogether if it isn't "easy to click" and "get things done now".

Examples

Google's is cute:  (refresh this page to see it in action)

It seems to me that ASCII captchas are also possible for smaller, cheaper uses. Just put some random garbage in the image. Example "4":

.*
.**
. **
. *****
.**
.*
.*

(Dots to reduce TabMunging)
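One way to generate such a thing, sketched in Python. The glyph below is a hand-drawn "4" made up for this example (it is not a reconstruction of the figure above), and the noise characters and noise rate are arbitrary assumptions.

```python
import random

# A hand-drawn 5x6 "4"; any glyph set would do.
GLYPH_4 = [
    "   * ",
    "  ** ",
    " * * ",
    "*****",
    "   * ",
    "   * ",
]

def render(glyph, rng, noise=0.1):
    """Return the glyph as text, with random garbage sprinkled into the blanks."""
    rows = []
    for row in glyph:
        chars = []
        for ch in row:
            if ch == " " and rng.random() < noise:
                chars.append(rng.choice(".,'`"))  # garbage never uses '*'
            else:
                chars.append(ch)
        # Leading dot keeps the row's indentation from being munged.
        rows.append("." + "".join(chars))
    return "\n".join(rows)
```

Calling `print(render(GLYPH_4, random.Random()))` shows one rendering. Since the garbage never uses the stroke character, a script could strip it trivially, so this is only as strong as the "smaller, cheaper" use case suggests.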

Keep in mind that many captchas even manage to fail at being human-readable. I've had family ask me to help them read the letters of over-distorted captchas. Many of them might even stop a colour-blind person completely.

Excuse me if this is ignorant, but why are we devising CAPTCHAs that allow for the "visually impaired" when that particular group wouldn't even be able to read the very wiki they're supposed to be editing?

At the risk of replying to a troll, what makes you think visually impaired people cannot read a Wiki? Wiki pages are especially well suited to the visually impaired because they are information rich, simple, clean, and mostly text based. This allows standard text-vocalization software to work particularly well.

I'm visually semi-impaired and I love Wiki, not only because the content is interesting, but because it's "information rich, simple, clean, and mostly text based." In fact, my computer's "Fred" voice just finished reading the above two paragraphs to me. UseMod wikis are also easy on my eyes and brain, but WikiPedia is too visually busy for me.

Wikis are misinformation-rich, said the Cretan.

If a CAPTCHA prevents me from using a site, I vote with my 'feet'. Many a web-site has lost my traffic because I failed its CAPTCHA test. Using the web is meant to make things easier, but the CAPTCHA tests only add complexity (a nail in the www coffin IMHO). When I go, I don't come back in the hope that they have fixed the 'problem'; I am gone.

See: NameMangling, SpamDefenseRoadmap, TrulyHorribleAcronyms

(last edited November 9, 2014)